ACL Findings 2025 - Byun and Choi

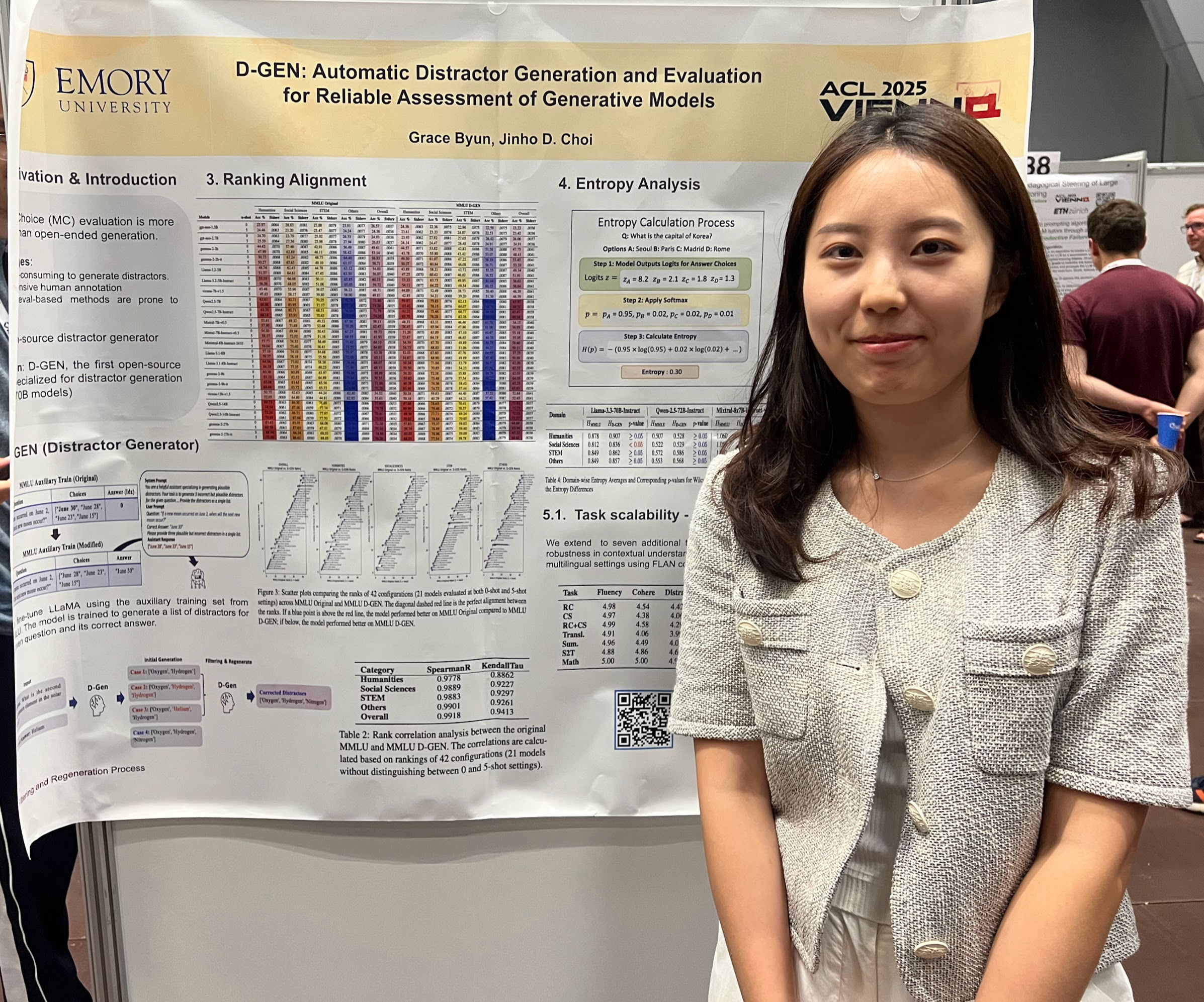

D-GEN: Automatic Distractor Generation and Evaluation for Reliable Assessment of Generative Models

Grace Byun, Jinho D. Choi

Abstract

Evaluating generative models with open-ended generation is challenging due to inconsistencies in response formats. Multiple-choice (MC) evaluation mitigates this issue, but generating high-quality distractors is time-consuming and labor-intensive. We introduce D-GEN, the first open-source distractor generator model that transforms open-ended data into an MC format. To evaluate distractor quality, we propose two novel methods: 1) ranking alignment, ensuring generated distractors retain the discriminatory power of ground-truth distractors, and 2) entropy analysis, comparing model confidence distributions. Our results show that D-GEN preserves ranking consistency (Spearman’s ρ 0.99, Kendall’s τ 0.94) and closely matches the entropy distribution of ground-truth distractors. Human evaluation further confirms the fluency, coherence, distractiveness, and incorrectness. Our work advances robust and efficient distractor generation with automated evaluation, setting a new standard for MC evaluation.
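
As a rough illustration of the two evaluation ideas named in the abstract, the sketch below computes Spearman's ρ and Kendall's τ between two model rankings and the Shannon entropy of a confidence distribution over answer options. It is not the authors' implementation: the function names, the accuracy values, and the choice of bits as the entropy unit are all assumptions made for this example, and the rank helpers assume no tied scores.

```python
import math
from itertools import combinations


def ranks(scores):
    """Return rank positions (1 = highest score). Assumes no tied scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    result = [0] * len(scores)
    for position, index in enumerate(order, start=1):
        result[index] = position
    return result


def spearman_rho(x, y):
    """Spearman rank correlation for untied data."""
    n = len(x)
    squared_diffs = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * squared_diffs / (n * (n * n - 1))


def kendall_tau(x, y):
    """Kendall tau-a: (concordant - discordant) / number of pairs."""
    concordant = discordant = 0
    for i, j in combinations(range(len(x)), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(x) * (len(x) - 1) / 2
    return (concordant - discordant) / n_pairs


def entropy(probs):
    """Shannon entropy in bits of a probability distribution over options."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical accuracies of four models on the same questions, once with
# ground-truth distractors and once with generated distractors (made-up data).
acc_ground_truth = [0.82, 0.74, 0.61, 0.55]
acc_generated = [0.80, 0.76, 0.57, 0.59]

rho = spearman_rho(acc_ground_truth, acc_generated)
tau = kendall_tau(acc_ground_truth, acc_generated)

# A model that spreads probability evenly over four options is maximally
# uncertain; one that puts all mass on a single option has zero entropy.
uniform_entropy = entropy([0.25, 0.25, 0.25, 0.25])
confident_entropy = entropy([1.0, 0.0, 0.0, 0.0])
```

Values of ρ and τ close to 1 would indicate that the generated distractors rank the models in nearly the same order as the ground-truth distractors. Comparing entropy values across the two distractor sets gives a rough sense of whether models are similarly uncertain on both.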

Venue / Year

Proceedings of the Annual Meeting of the Association for Computational Linguistics (ACL): Findings / 2025

Links

Anthology | Paper | Poster | BibTeX | GitHub