TACL 2024 - Finch and Choi

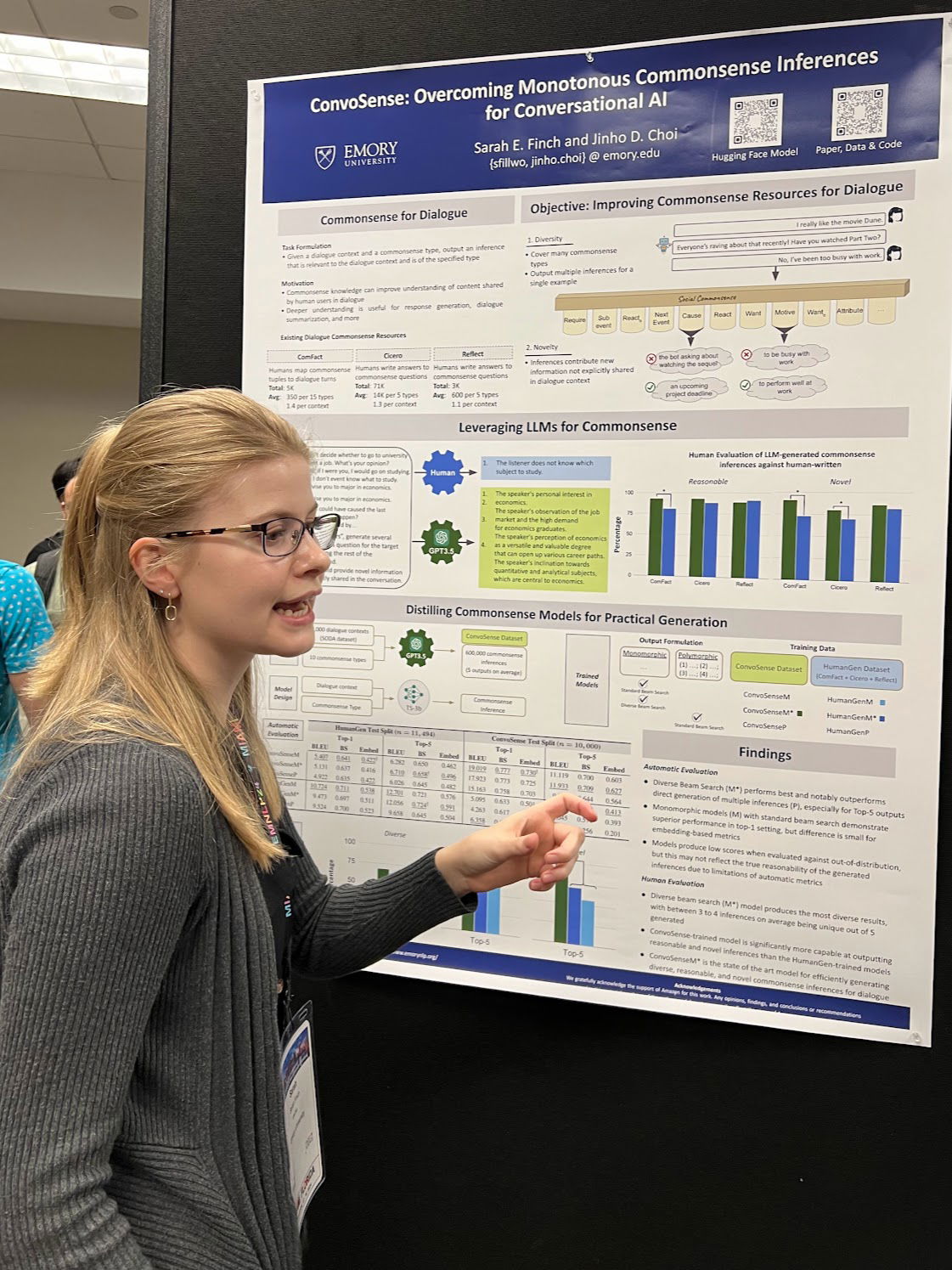

ConvoSense: Overcoming Monotonous Commonsense Inferences for Conversational AI

Sarah E. Finch, Jinho D. Choi

Abstract

Mastering commonsense understanding and reasoning is a pivotal skill for conducting engaging conversations. While there have been several attempts to create datasets that facilitate commonsense inferences in dialogue contexts, existing datasets tend to lack in-depth details, restate information already present in the conversation, and often fail to capture the multifaceted nature of commonsense reasoning. In response to these limitations, we use GPT to compile ConvoSense, a new synthetic dataset for commonsense reasoning in dialogue contexts that offers greater contextual novelty, provides a higher volume of inferences per example, and substantially enriches the detail conveyed by the inferences. Our dataset contains over 500,000 inferences across 10,000 dialogues with 10 popular inference types. It enables the training of generative commonsense models for dialogue that outperform models trained on previous datasets at producing plausible inferences with high novelty. To the best of our knowledge, it is the first dataset of its kind to provide such a multitude of novel inferences at such a large scale.

Venue / Year

Transactions of the Association for Computational Linguistics (TACL) / 2024
